Comwork AI

Purpose

This feature exposes AI (artificial intelligence) models, such as NLP (natural language processing) models or LLMs (large language models), as an API.

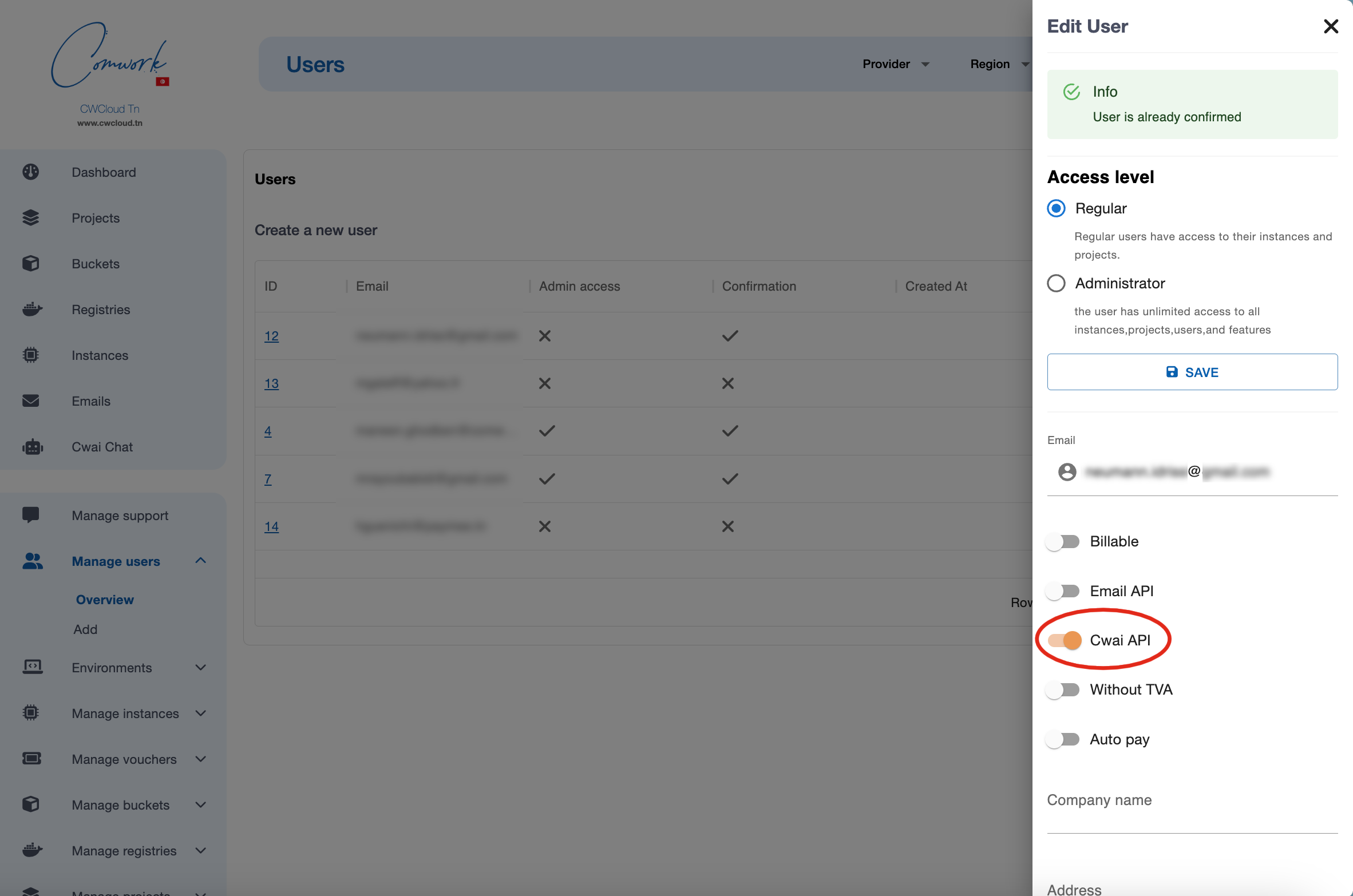

Enabling this API

In the SaaS version, you can request access through the support system.

If you're an admin of the instance, you can grant access to users like this:

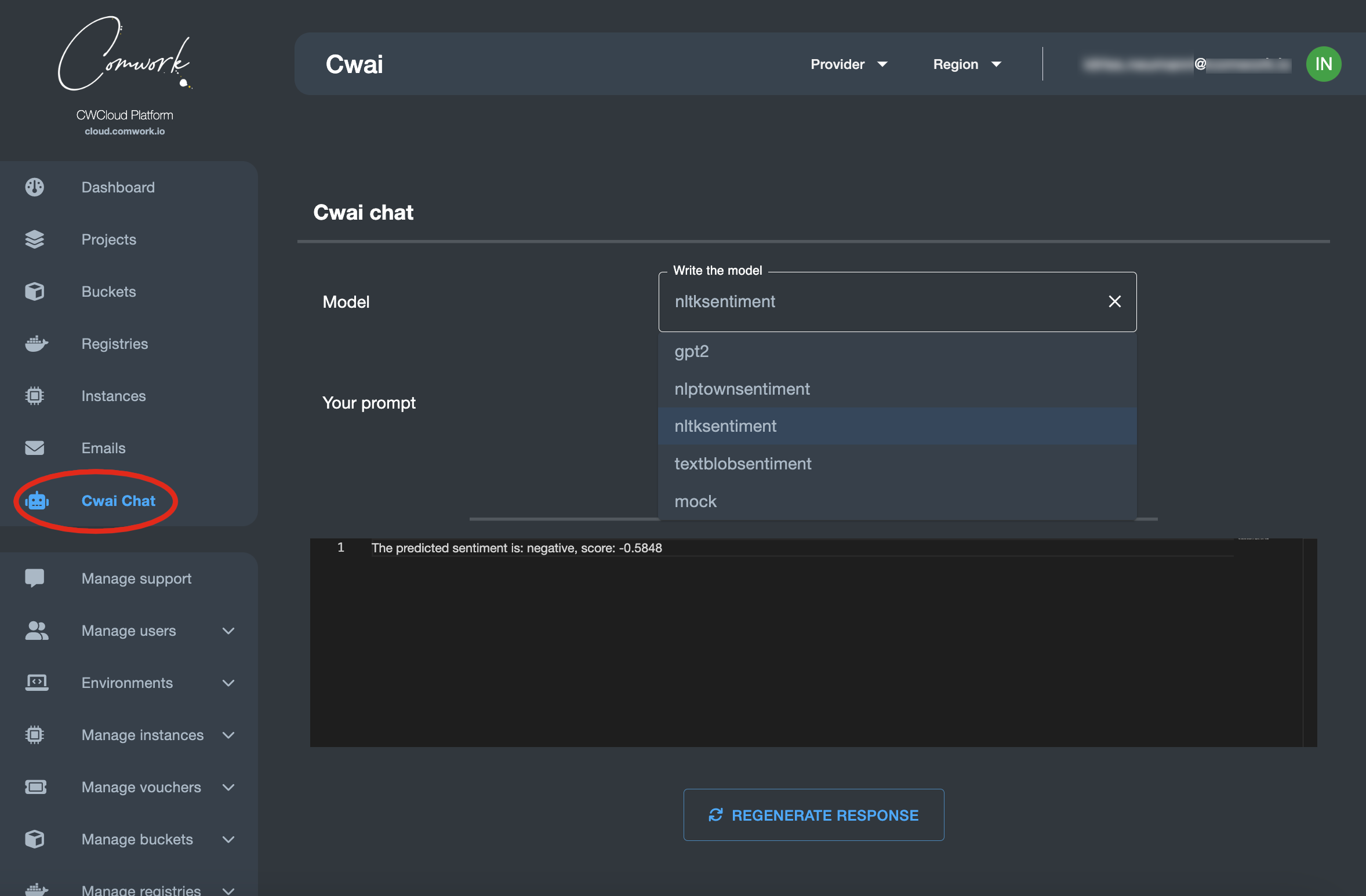

UI chat

Once access is enabled for your account, you can try the CWAI API using this chat web UI:

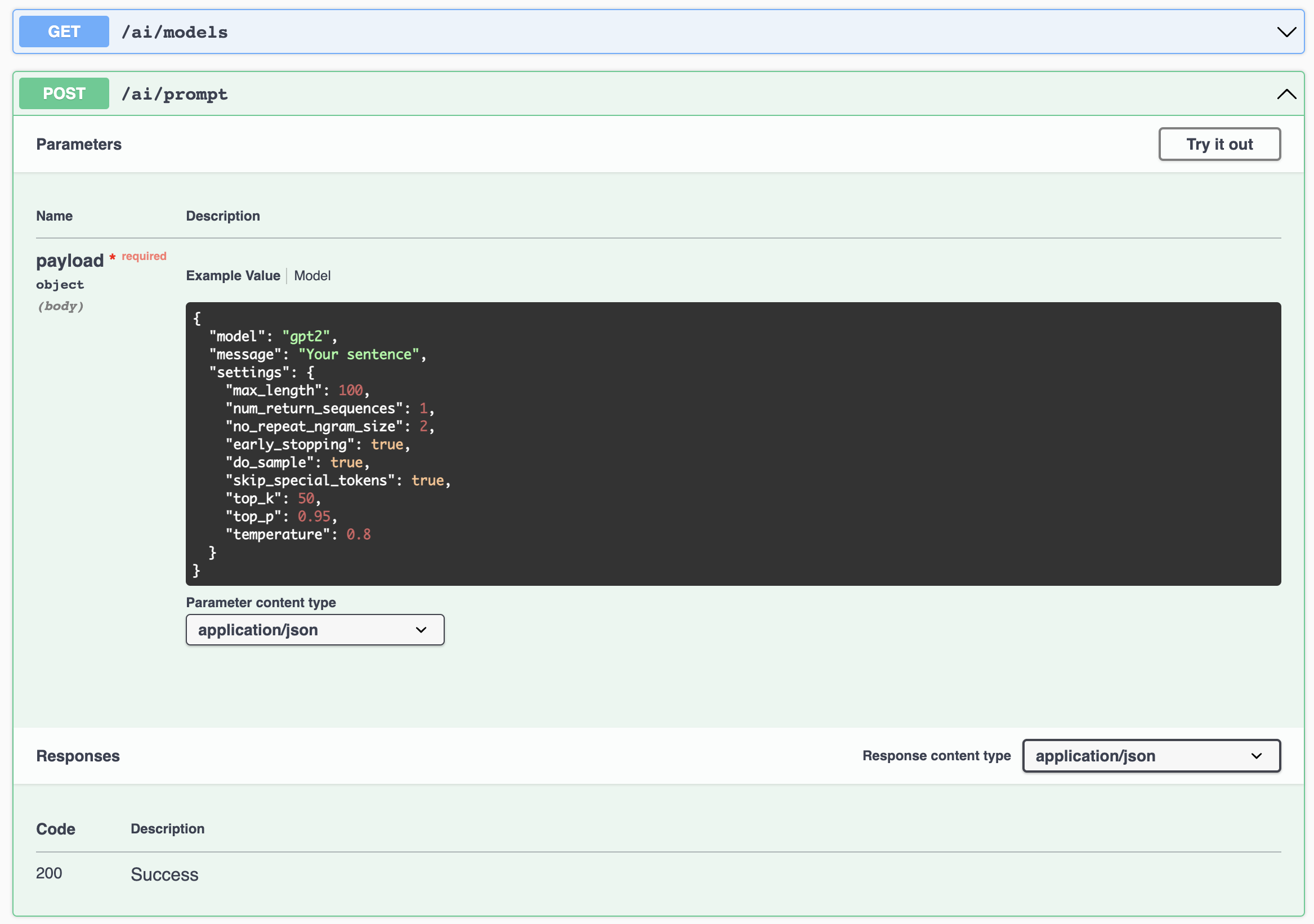

Use the API

The main purpose is to interact with those models through simple HTTP endpoints.

Here's how to get all the available models:

    curl -X 'GET' 'https://api.cwcloud.tech/v1/ai/models' -H 'accept: application/json' -H 'X-Auth-Token: XXXXXX'

Result:

    {
      "models": [
        "gpt2",
        "nlptownsentiment",
        "mock"
      ],
      "status": "ok"
    }
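The same call can be made from a script. The following is a minimal Python sketch using only the standard library. The helper names `build_models_request`, `parse_models` and `list_models` are illustrative and not part of CWAI, and it assumes the response has the shape shown above.

```python
import json
import urllib.request

API_URL = "https://api.cwcloud.tech"  # replace with your instance URL if needed


def build_models_request(base_url: str, token: str) -> urllib.request.Request:
    """Build the GET request for the models endpoint (hypothetical helper)."""
    return urllib.request.Request(
        url=f"{base_url}/v1/ai/models",
        headers={"accept": "application/json", "X-Auth-Token": token},
        method="GET",
    )


def parse_models(body: str) -> list[str]:
    """Extract the model names from a JSON body shaped like the result above."""
    payload = json.loads(body)
    if payload.get("status") != "ok":
        raise RuntimeError(f"unexpected status: {payload.get('status')}")
    return payload["models"]


def list_models(base_url: str, token: str) -> list[str]:
    """Call the endpoint and return the available model names."""
    with urllib.request.urlopen(build_models_request(base_url, token)) as resp:
        return parse_models(resp.read().decode("utf-8"))
```

Calling `list_models(API_URL, "XXXXXX")` with a valid token in place of `XXXXXX` performs the request. Building the request and parsing the body are kept separate so that each can be checked without network access.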

Then prompting with one of the available models:

    curl -X 'POST' \
      'https://api.cwcloud.tech/v1/ai/prompt' \
      -H 'accept: application/json' \
      -H 'Content-Type: application/json' \
      -H 'X-Auth-Token: XXXXXX' \
      -d '{
        "model": "nlptownsentiment",
        "message": "This is bad !",
        "settings": {}
      }'

The answer would be:

    {
      "response": [
        "The predicted emotion is: Anger"
      ],
      "score": 1,
      "status": "ok"
    }

Notes:

- Replace the `XXXXXX` value with your own token, generated with this procedure.
- You can replace `https://api.cwcloud.tech` with the URL of the API instance you're using, via the `CWAI_API_URL` environment variable. For Tunisian customers, for example, it would be `https://api.cwcloud.tn`.
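As an illustration of these notes, here is a minimal Python sketch of the prompt call using only the standard library. Reading `CWAI_API_URL` from the environment of the client script is an assumption of this sketch, and the helper names `api_base_url`, `build_prompt_request` and `send_prompt` are hypothetical.

```python
import json
import os
import urllib.request
from typing import Optional

DEFAULT_API_URL = "https://api.cwcloud.tech"


def api_base_url() -> str:
    """Return the instance URL, preferring CWAI_API_URL when it is set (assumption)."""
    return os.environ.get("CWAI_API_URL", DEFAULT_API_URL).rstrip("/")


def build_prompt_request(token: str, model: str, message: str,
                         settings: Optional[dict] = None) -> urllib.request.Request:
    """Build the POST request for the prompt endpoint with a JSON body."""
    body = {"model": model, "message": message, "settings": settings or {}}
    return urllib.request.Request(
        url=f"{api_base_url()}/v1/ai/prompt",
        data=json.dumps(body).encode("utf-8"),
        headers={
            "accept": "application/json",
            "Content-Type": "application/json",
            "X-Auth-Token": token,
        },
        method="POST",
    )


def send_prompt(token: str, model: str, message: str) -> dict:
    """Send the prompt and return the decoded JSON answer."""
    with urllib.request.urlopen(build_prompt_request(token, model, message)) as resp:
        return json.loads(resp.read().decode("utf-8"))
```

With `CWAI_API_URL=https://api.cwcloud.tn` exported, `send_prompt("XXXXXX", "nlptownsentiment", "This is bad !")` would target the Tunisian instance; without it, the sketch falls back to `https://api.cwcloud.tech`.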

Use the CLI

You can use the `cwc` CLI, which provides an `ai` subcommand:

    cwc ai
    This command lets you call the CWAI endpoints

    Usage:
      cwc ai
      cwc ai [command]

    Available Commands:
      models      Get the available models
      prompt      Send a prompt

    Flags:
      -h, --help   help for ai

    Use "cwc ai [command] --help" for more information about a command.

List the available models

    cwc ai models
    Models
    [gpt2 nlptownsentiment nltksentiment textblobsentiment mock]

Send a prompt to an available model

    cwc ai prompt
    Error: required flag(s) "message", "model" not set
    Usage:
      cwc ai prompt [flags]

    Flags:
      -h, --help             help for prompt
      -m, --message string   The message input
      -t, --model string     The chosen model

    cwc ai prompt --model nltksentiment --message "This is bad"
    Status   Response                                                  Score
    ok       [The predicted sentiment is: negative, score: -0.5423]    -0.5423
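If you want to drive the CLI from a script, the following Python sketch wraps the `cwc ai prompt` command shown above. It assumes `cwc` is installed and on your `PATH`; the helper names `prompt_command` and `run_prompt` are illustrative, and only the `--model` and `--message` flags listed in the help output are used.

```python
import subprocess


def prompt_command(model: str, message: str) -> list[str]:
    """Build the argument list for `cwc ai prompt` (hypothetical helper)."""
    return ["cwc", "ai", "prompt", "--model", model, "--message", message]


def run_prompt(model: str, message: str) -> str:
    """Run the CLI and return its raw standard output as text."""
    result = subprocess.run(
        prompt_command(model, message),
        capture_output=True,
        text=True,
        check=True,  # raise CalledProcessError on a non-zero exit status
    )
    return result.stdout
```

Passing the arguments as a list, rather than as a single shell string, means the message does not need shell quoting even when it contains spaces or quotes.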

Load a model asynchronously

    cwc ai load [model]
    Error: accepts 1 arg(s), received 0
    Usage:
      cwc ai load [model] [flags]

    Flags:
      -h, --help   help for load

    exit status 1

Driver interface

This section is for contributors who want to add new models.

You can implement your own adapter, which loads a model and generates answers from it, by implementing this abstract class:

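    from abc import ABC, abstractmethod

    # Prompt is the project's own prompt type, imported from its module.
    class ModelAdapter(ABC):
        @abstractmethod
        def load_model(self):
            pass

        @abstractmethod
        def generate_response(self, prompt: Prompt):
            pass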
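To make the contract concrete, here is a minimal, self-contained sketch of an adapter. Everything project-specific in it is an assumption: the `Prompt` dataclass is a stand-in whose fields are guessed from the API request body, `EchoAdapter` is a hypothetical adapter, and the return shape simply mirrors the API answer shown earlier rather than a confirmed internal format.

```python
from abc import ABC, abstractmethod
from dataclasses import dataclass, field


@dataclass
class Prompt:
    """Stand-in for the project's Prompt type (fields assumed from the API body)."""
    model: str
    message: str
    settings: dict = field(default_factory=dict)


class ModelAdapter(ABC):
    """Copy of the abstract class above, repeated so the sketch runs on its own."""

    @abstractmethod
    def load_model(self):
        pass

    @abstractmethod
    def generate_response(self, prompt: Prompt):
        pass


class EchoAdapter(ModelAdapter):
    """Hypothetical adapter that echoes the message instead of running a model."""

    def __init__(self):
        self.loaded = False

    def load_model(self):
        # A real adapter would load weights or build an inference pipeline here.
        self.loaded = True

    def generate_response(self, prompt: Prompt):
        # Load lazily so the adapter also works if load_model was never called.
        if not self.loaded:
            self.load_model()
        # Assumed return shape, mirroring the "response" and "score" API fields.
        return {"response": [f"echo: {prompt.message}"], "score": 1}
```

For example, `EchoAdapter().generate_response(Prompt(model="echo", message="hi"))` returns a dictionary whose `response` list contains `"echo: hi"`. Because both methods are abstract, a subclass that omits either one cannot be instantiated.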

Then you have to update this list with your new adapter:

    _default_models = [
        'gpt2',
        'nlptownsentiment',
        'nltksentiment',
        'textblobsentiment',
        'robertaemotion',
        'log'
    ]